We need to talk about the National Academy of Sciences

Last week, the President of the National Academy of Sciences (NAS) came out with an opinion article in PNAS (operated by the NAS). The opinion is “Plan S falls short for society publishers—and for the researchers they serve.”

Missing from the opinion was an outline of what the NAS has to gain if “Plan S” is not fulfilled. And while it went into some detail on how, in general, societies would lose out with “Plan S” (with arguably questionable math), it didn’t explain why the NAS in particular fears it would have losses. A look at its financial statements shows an organization that is grossly overspending on non-charitable activities, even when benchmarked against similar non-profits. Out of every $1 the NAS spends, only $0.17 goes back out to grants and similar activities (overheads unrelated to research or the mission make up the bulk of the remaining expenses; see figure 1). By contrast, the Gates Foundation gives back $0.84 of every dollar spent, and the Wellcome Trust (UK) returns $0.71 of every dollar equivalent spent.

Of these three organizations, only the latter two (Gates and Wellcome) support Plan S and Open Access. One really has to wonder why there is such a huge discrepancy in charitable spending, and whether it is connected to support for or opposition to Open Access and Plan S. Taken to the extreme, if Plan S forced the NAS to close down (an unlikely event, of course), then more money would in theory be available for research grants, as more efficient charities could take charge.

It feels like blasphemy to be saying that research could possibly be better off if the National Academy of Sciences ceased to operate. It feels like heresy because, unlike most non-profits, the NAS was formed under a congressional charter. Other than its beginnings, however, the NAS is no different from other non-profits. It is independent of Congress, just like all other corporations and non-profits. A congressional charter confers no special privilege or status; it is merely symbolic. Congressional charters for new non-profits were in fact stopped several decades ago. The NAS, like all other non-profits, has no official governmental function in the US, although the US government can and does call on the NAS for scientific direction (it does this with for-profits and other non-profits as well).

What the congressional charter’s designation, history and naming have uniquely conferred upon the NAS is a legacy halo-effect with little oversight into how well it is operating internally. Like many bloated non-profits, it has painted itself into a corner where the cost of overheads and legacy spending habits have removed any ability to look to the future. It has become the proverbial deer in headlights, too afraid to progress; Open Access and Plan S are the cars driving into the future, about to run it over.

If the NAS fears it cannot survive this future, then what in the hell is it good for and what should be done about it? The problem seems to be the poorly run NAS, rather than Open Access. The NAS looks like it is barely capable of surviving any environmental changes that it faces going forward. This is before we get into the irony of an organization whose mission is “furthering science in America” using its own publications and platform, which others will look to without questioning, to shout down Open Access and other “challenges” it fears.

When the NAS says it has concerns about Open Access and Plan S, what it really means is that its OWN tenuous financial stability is being threatened. This is not the same as threatening all of science, as we see with Wellcome, Gates, and other organizations that are able to thrive. The NAS mission also states that it is supposed to provide “objective advice to the nation on matters related to science.” If the NAS has become financially compromised, can we really count on it to remain “objective?”

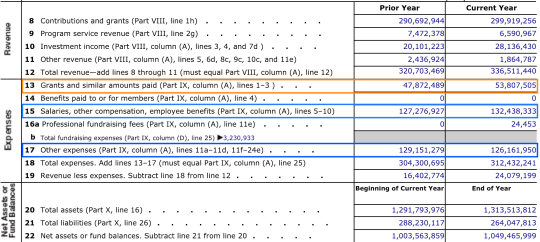

Figure 1 National Academy of Sciences financials (Form 990 for 2016, the most recent available). Previous years are similar in the proportion of expenditures going towards various activities.

The NAS had a total expenditure of $312.4M (line 19) in 2016, according to the most recent available financials. Of this amount, $53.8M was spent on grants and other charitable activities (line 13). This amounts to $0.17 of each dollar spent going towards grants, etc. (or $0.14 of each revenue dollar). Meanwhile, $132.4M was spent directly on salaries and benefits (or $0.42 of each dollar spent). Line 17 shows “other expenses” totaling $126M. That sum includes $19.95M in travel expenses, $18.3M in “occupancy” or building rents, $13.2M on IT and so on. Only $8.4M went towards “Conferences, conventions, and meetings.”
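The per-dollar figures above are simple ratios against total expenditure. As a quick check, here is a minimal Python sketch that uses only the rounded amounts quoted in this post; the variable and function names are illustrative and do not come from the Form 990 itself.

```python
# Rounded 2016 Form 990 amounts quoted in the post, in millions of US dollars.
NAS_TOTAL_EXPENSES = 312.4      # line 19
NAS_GRANTS = 53.8               # line 13
NAS_SALARIES_BENEFITS = 132.4   # salaries and benefits

def share_of_spend(amount, total):
    """Return amount as a fraction of total spending, rounded to two decimals."""
    return round(amount / total, 2)

nas_grant_share = share_of_spend(NAS_GRANTS, NAS_TOTAL_EXPENSES)
nas_salary_share = share_of_spend(NAS_SALARIES_BENEFITS, NAS_TOTAL_EXPENSES)

print(f"Grants: ${nas_grant_share:.2f} per dollar spent")
print(f"Salaries and benefits: ${nas_salary_share:.2f} per dollar spent")
```

Because the inputs are rounded to the nearest $0.1M, the results are only good to about two decimal places, which is all the comparison needs.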

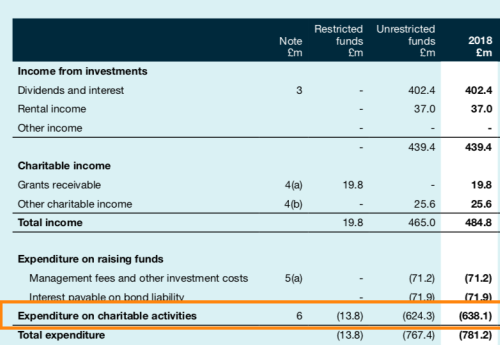

Figure 2 Wellcome Trust financials for 2018

For Wellcome, the available financials are more recent, from 2018. Wellcome spent £781.2M in 2018, of which £638.1M went toward grants, research, etc. Of that £638.1M, £86.2M was allocated for support, which includes all overheads (salaries, travel, rents, etc.). That left £551.9M for direct charitable activities, or £0.71 of every pound spent (the equivalent of $0.71 per dollar).
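The Wellcome figure takes one extra step, because support costs are subtracted before dividing by total spend. A small Python sketch of that arithmetic, again using only the rounded amounts quoted here and with illustrative variable names:

```python
# Rounded 2018 Wellcome Trust amounts quoted in the post, in millions of pounds.
WELLCOME_TOTAL_SPEND = 781.2
WELLCOME_CHARITABLE = 638.1   # grants, research, etc., including support costs
WELLCOME_SUPPORT = 86.2       # overheads allocated within charitable spending

# Strip out support costs to get direct charitable spending.
wellcome_direct = WELLCOME_CHARITABLE - WELLCOME_SUPPORT

# Express direct charitable spending as a share of everything spent.
wellcome_direct_share = wellcome_direct / WELLCOME_TOTAL_SPEND

print(f"Direct charitable spend: £{wellcome_direct:.1f}M")
print(f"Share of total spend: {wellcome_direct_share:.2f}")
```

The share is a pure ratio, so it reads the same whether it is stated per pound or per dollar equivalent.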

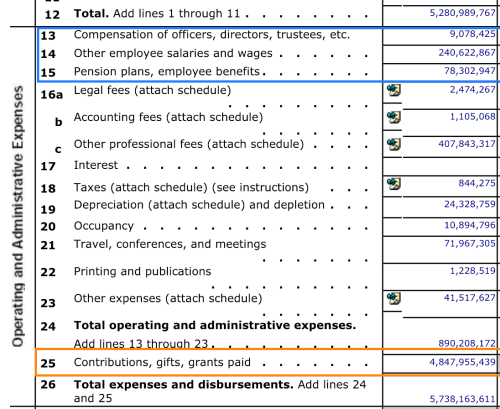

Figure 3 Gates Foundation financials for 2016 (most recent year available)

Above, Figure 3 line 12 shows total revenues for the Gates Foundation of $5.28B (that’s billion) and expenses (line 26) of $5.7B. Of the expenses, $4.847B went toward grants, etc (or $0.84 of every dollar spent).

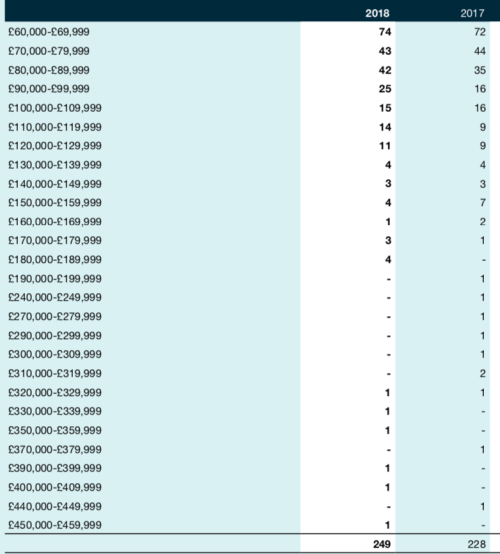

Figure 4 Wellcome Trust employee compensation

I didn’t want to get into individual salaries or compensation in the main paragraphs at the top. These are huge organizations, and while salaries can be a lot, it isn’t clear that highly paid executives directly contribute to the massive inefficiencies seen in the NAS. The 15 highest paid executives earned $7.8M in compensation, including $1.1M to the President of the NAS. That is 2.5% of total expenditures for the highest paid executives, compared with $132.4M, or 42%, for all staff. The Gates Foundation has similar levels of executive compensation, BUT its total staff expenditure is only 5.7% of all costs. The inefficiencies at the NAS are seen only when looking at all staff costs.

Meanwhile, figure 4 shows staff compensation (salary, bonus, etc.) for the Wellcome Trust’s employees (it excludes the investment team, which has performance-based salaries depending on investment income). Again, executive compensation isn’t the real issue, although the highest paid Wellcome directors are paid just half of what their US counterparts at the NAS receive. The real story is the efficiency of total staff expenditures.